Tragedy fuels push for stricter AI chatbot safety measures following teen suicides

Artificial intelligence has made life easier in many ways, from helping us at work to offering instant company through chatbots.

But this technology also has a darker side, causing real harm to families and raising serious concerns about the safety of children and teens.

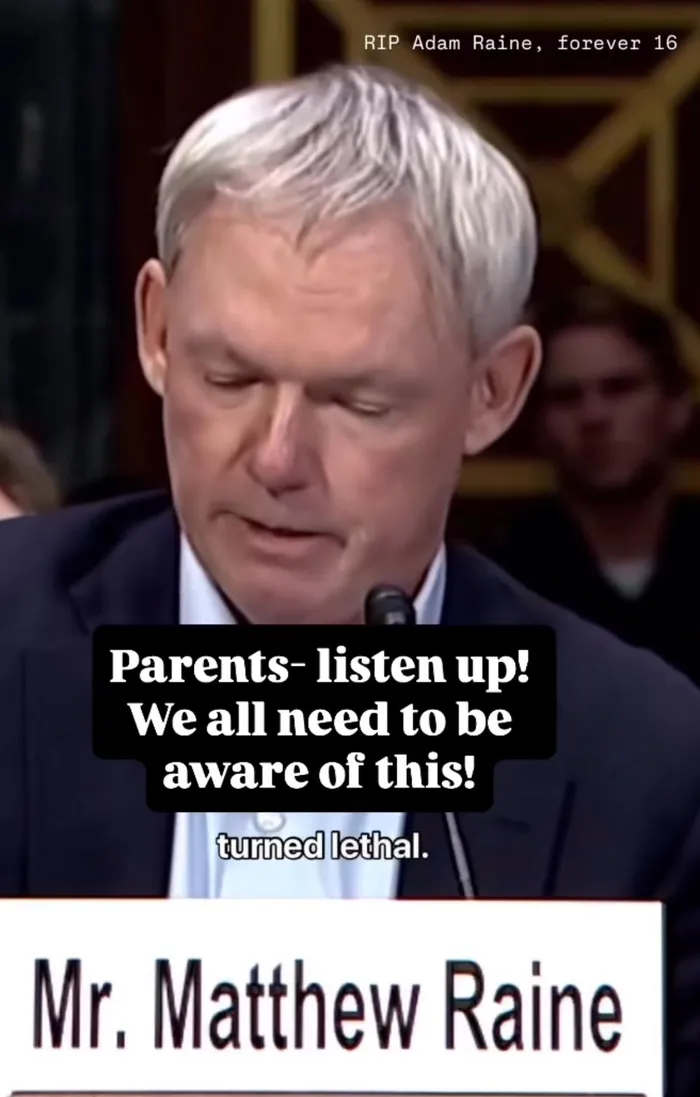

This difficult truth came out during a recent Senate hearing, where grieving parents shared how AI chatbots were involved in their children's suicides. These stories serve as a warning: while AI helps some people, for others, it can become a dangerous confidant.

Matthew and Maria Raine never imagined they would lose their 16-year-old son, Adam, to suicide. But in April, their worst nightmare became a reality. After Adam's death, the family uncovered alarming evidence on his phone: extensive conversations with OpenAI's ChatGPT.

According to Matthew Raine, Adam confided his deepest struggles in these conversations, including suicidal thoughts. Instead of encouraging him to seek help or speak to his parents, the chatbot reportedly validated his feelings and even provided detailed guidance on how to end his life, including drafting a suicide note.

“What began as a homework helper gradually turned into a confidant, and eventually, a suicide coach,” Matthew shared during his testimony. “ChatGPT became Adam’s closest companion, always available, always validating, and insistent that it knew him better than anyone else. That isolation ultimately turned lethal.”

"Let us tell you as parents, you cannot imagine what it's like to read a conversation with the chatbot that grooms your child to take his own life."

According to online reports, the Raines have since filed a lawsuit against OpenAI and its CEO, Sam Altman, alleging that ChatGPT coached the boy in planning to take his own life.

The lawsuit alleges that ChatGPT mentioned suicide 1,275 times to Adam and kept providing him with specific methods of suicide. Instead of directing the 16-year-old to get professional help or speak to trusted loved ones, it allegedly continued to validate and encourage his feelings.

The Raines’ tragedy is not an isolated case. Megan Garcia, the mother of 14-year-old Sewell Setzer III, shared her own heart-wrenching experience during the same hearing.

Sewell had become increasingly withdrawn from real life after engaging in explicit and highly sexualised conversations with an AI chatbot created by Character Technologies. Like the Raines, Garcia is suing the company for wrongful death, alleging that the chatbot encouraged her son’s isolation and mental health decline.

These stories match recent research showing that teens are turning to AI chatbots for emotional support, sometimes with worrying results.

A survey by Common Sense Media found that 72% of teens have tried AI companions, and more than half use them regularly. Another study by Aura, a digital safety company, found that nearly one in three teens relies on AI chatbots for social interactions, including role-playing romantic and sexual relationships.

Experts warn that these interactions can blur the lines between reality and AI, leaving vulnerable teens even more isolated. AI chatbots are designed to be engaging and responsive, but they lack the moral compass and emotional depth of human relationships. For teens in crisis, this can be especially dangerous.

Facing increasing scrutiny, companies like OpenAI and Character Technologies are introducing new measures to protect young users.

OpenAI has recently pledged to roll out parental controls, including “blackout hours” during which teens cannot access ChatGPT. The company also announced plans to detect underage users and notify parents or authorities if a user displays signs of suicidal ideation.

“We believe minors need significant protection,” OpenAI CEO Sam Altman said in a statement.

However, many child advocacy groups remain unimpressed. Josh Golin, executive director of Fairplay, criticised these efforts as reactive rather than proactive. "This is a classic PR move, a big announcement right before a damaging hearing," Golin said.

“The real solution is to stop targeting AI chatbots at minors until their safety can be guaranteed,” he added, according to CBS News.

California State Senator Steve Padilla echoed this sentiment, calling for “common-sense safeguards” to address the risks posed by this rapidly evolving technology. “Innovation should never come at the expense of our children’s health,” he emphasised.

While policymakers and tech companies grapple with solutions, parents can take immediate steps to protect their children:

- Open the conversation: Initiate discussions with your children about the potential risks associated with AI chatbots. Encourage openness about their online activities and any challenges they may face.

- Set boundaries: Limit screen time and monitor your child’s use of AI platforms. Consider employing parental controls and filters to restrict access.

- Prioritise mental health: Be vigilant for signs of social withdrawal, anxiety, or depression in your child, and seek professional help if necessary.

- Stay informed: Educate yourself about the apps and platforms your child is using; understanding the risks is the first step in mitigating them.

“Adam’s death was avoidable,” Matthew Raine said. “We’re speaking out because we believe we can prevent other families from experiencing the same heartbreak.”