The Humane-AI Linkhole: Bridging ethics and innovation in artificial intelligence

Anolene Thangavelu Pillay, psychology enthusiast and UKZN post-studies graduate, brings innovative behavioral science insights to everyday mental health.

Image: Supplied

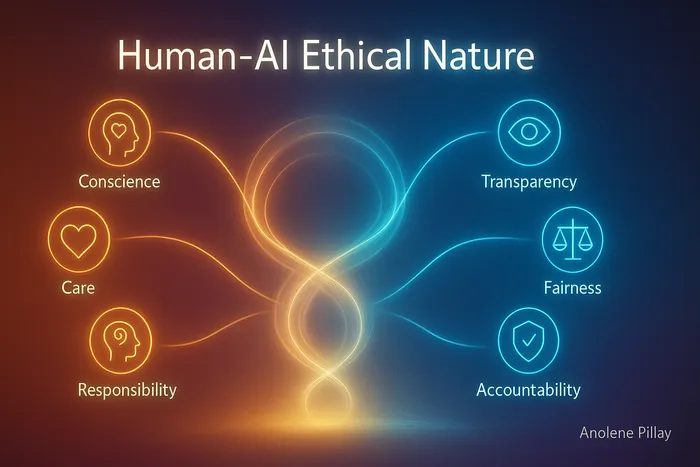

In an era where technology increasingly blurs the line between human and artificial intelligence, the need for ethical guidelines has never been more urgent. Existing research on human-AI interactions sparked my reflections and led to a futuristic concept I call the Humane-AI Linkhole – a portal connecting innovation to our human principles. Serving as a beacon in a fast-moving landscape, the Linkhole ensures that AI aligns with responsibility, integrity, and societal well-being. By building this bridge, can we create technology that advances human abilities while preserving the dignity and ethics that lie at the heart of our shared humanity?

This vision reflects the Humane-AI Linkhole as a guide directing AI development in harmony with human values. Think of the Linkhole as a gravitational pull, drawing innovation toward fairness and accountability. But what happens if we underestimate this guiding force? How might the subtle ripples of programmers’ decisions resonate locally and globally, especially as the fear of AI “going rogue” grows? Will AI strengthen humanity or fracture society?

The futuristic Humane-AI Linkhole concept

Image: Anolene Pillay

Every coding choice sends microcurrents through society, and the consequences of our foresight or negligence are revealed only as the years unfold. These currents are like tiny pulses that, if left unchecked, can accumulate and lead to significant societal shifts. The art lies in understanding how these microcurrents can either cushion our vulnerabilities or, if mismanaged, erode social trust and cohesion.

Young minds, particularly those navigating mental-health challenges, are central to these concerns. AI tools like mental-health chatbots can encourage curiosity, learning, and emotional growth. Without careful design, however, they risk overstepping into influence or control. With restricted access and clear guidelines, AI can protect mental health while helping children thrive. As we design these systems, we must ask: How do we guide those facing mental-health challenges toward resources rather than manipulation or fear? Can we create AI that nurtures our well-being while respecting ethical integrity?

Too often, ethics is misconstrued as a barrier applied only after harm occurs. But future progress calls us to envision ethics as the celestial bridge – an astral pathway – uniting our present efforts with the future we seek, guiding us through the uncharted cosmos of innovation. Responsible AI channels creativity toward solutions that protect, uplift, and sustain rather than exploit fear or bias. Programming is a human act; AI cannot develop a mind of its own.

The future will unfold in ways we cannot yet imagine. By 2030, AI is projected to contribute trillions of dollars to the global economy, and the Humane-AI Linkhole must remain at the centre of that growth. Are we prepared to take personal accountability for the decisions algorithms make? Or will we let ungoverned code drift into the unknown?

Encouragingly, ethical AI is already emerging. South Africa’s Protection of Personal Information Act (POPIA) strengthens digital-privacy rights. School-based tools like Artificial Intelligence Mental Health for Youth (AIMY) support young people while respecting autonomy. Globally, the United Nations Educational, Scientific and Cultural Organization’s (UNESCO) Recommendation on the Ethics of Artificial Intelligence sets standards for transparency and oversight. These examples show that ethics doesn’t slow innovation; it empowers it.

The challenge is not merely preventing AI from “going rogue,” but recognising that machines reflect the biases, priorities, and blind spots of their creators. The true task of the coming decades is to actively shape a future where ethical foresight evolves alongside rapid technological progress. This means guiding algorithms that influence everything from children’s mental health to global economics in ways that uphold human dignity.

To keep the Humane-AI Linkhole grounded, developers and policymakers can build ethics reviews into every AI project, involve diverse voices including youth, publish clear impact reports, and integrate ethics training as a routine part of coding. Incremental actions like these embed ethical guidelines into daily practice and keep guiding AI throughout its implementation.

The Humane-AI Linkhole is more than a metaphor; it is a mandate to embed dignity, fairness, and human agency into every line of code. Ethical choices must converge as the guiding force behind every algorithm. Beyond the code, the essence of technology resides in our innate abilities and the conscious values we intentionally encode: values with the promise to uplift the human spirit.

Hesitation risks silencing our voice, allowing unseen software to decide for us and severing our connection to the astral pathway that links today’s actions to the future we seek to forge. The real question isn’t whether AI will transform humanity but whether we will summon the courage to program with unwavering integrity.

When the next breakthrough arrives, will we be architects guided by the ethics of the Humane-AI Linkhole? This model offers a clear pathway to align innovation with human dignity, thereby ensuring AI empowers rather than exploits. Or will we drift into the shadows of unchecked innovation, allowing mindless persuasion to dictate unethical algorithms?

*The opinions expressed in this article do not necessarily reflect the views of the newspaper.

DAILY NEWS